Videos/A Digital Media Primer For Geeks: Difference between revisions

(more images) |

m (fix Wikipedia) |

| (112 intermediate revisions by 7 users not shown) | |||

| Line 1: | Line 1: | ||

<small>''Wiki edition''</small> | <small>''Wiki edition''</small> | ||

The program offers a brief history of digital media, a quick summary of the sampling theorem, and myriad details | [[Image:Dmpfg_001.jpg|360px|right]] | ||

This first video from Xiph.Org presents the technical foundations | |||

of modern digital media via a half-hour firehose of information. | |||

One community member called it "a Uni lecture I never got but really wanted." | |||

The program offers a brief history of digital media, a quick summary of the [[Wikipedia:Sampling_theorem|sampling theorem]], | |||

and a myriad of details on low level audio and video characterization and formatting. | |||

It's intended for budding geeks looking to get into video coding, | |||

as well as the technically curious who want to know more about the media they wrangle for work or play. | |||

See also: [[Videos/Digital_Show_and_Tell|Episode 02: Digital Show and Tell]] | |||

<center><font size="+2">[http://www.xiph.org/video/vid1.shtml Download or Watch online]</font></center> | <center><font size="+2">[http://www.xiph.org/video/vid1.shtml Download or Watch online]</font></center> | ||

<br style="clear:both;"/> | <br style="clear:both;"/> | ||

Players supporting [https://www.webmproject.org/ WebM]: [https://www.videolan.org/vlc/ VLC], [https://www.mozilla.com/en-US/firefox/ Firefox], [https://www.chromium.org/Home Chrome], [https://www.opera.com/ Opera], [https://www.webmproject.org/users/ more…] | |||

Players supporting [[Ogg]]/[[Theora]]: [https://www.videolan.org/vlc/ VLC], [https://www.firefox.com/ Firefox], [https://www.opera.com/ Opera], [[TheoraSoftwarePlayers|more…]] | |||

If you're having trouble with playback in a modern browser or player, please visit our [[Playback_Troubleshooting|playback troubleshooting and discussion]] page. | |||

<hr/> | <hr/> | ||

==Introduction== | ==Introduction== | ||

[[Image:Dmpfg_000.jpg|360px|right]] | |||

[[Image:Dmpfg_002.jpg|360px|right]] | |||

<small>[[Talk:A_Digital_Media_Primer_For_Geeks_(episode_1)#Introduction|Discuss this section]]</small> | |||

Workstations and high end personal computers have been able to | Workstations and high-end personal computers have been able to | ||

manipulate digital audio pretty easily for about fifteen years now. | manipulate digital audio pretty easily for about fifteen years now. | ||

It's only been about five years that a decent workstation's been able | It's only been about five years that a decent workstation's been able | ||

| Line 26: | Line 46: | ||

Well good! Because this stuff is a lot of fun! | Well good! Because this stuff is a lot of fun! | ||

It's no problem finding consumers for digital media. But here I'd | |||

like to address the engineers, the mathematicians, the hackers, the | like to address the engineers, the mathematicians, the hackers, the | ||

people who are interested in discovering and making things and | people who are interested in discovering and making things and | ||

| Line 39: | Line 58: | ||

researchers "are the best of the best, so much smarter than anyone | researchers "are the best of the best, so much smarter than anyone | ||

else that their brilliant ideas can't even be understood by mere | else that their brilliant ideas can't even be understood by mere | ||

mortals. This is bunk. | mortals." This is bunk. | ||

Digital audio and video and streaming and compression offer endless | Digital audio and video and streaming and compression offer endless | ||

deep and stimulating mental challenges, just like any other | deep and stimulating mental challenges, just like any other | ||

discipline. It seems elite because so few people have | discipline. It seems elite because so few people have been | ||

involved. So few people have been involved perhaps because so few | involved. So few people have been involved perhaps because so few | ||

people could afford the expensive, special-purpose equipment it | people could afford the expensive, special-purpose equipment it | ||

| Line 57: | Line 76: | ||

Quite a few people watching are going to be way past anything that I'm | Quite a few people watching are going to be way past anything that I'm | ||

talking about, at least for now. On the other hand, I'm probably | talking about, at least for now. On the other hand, I'm probably | ||

going to go too fast for folks who really | going to go too fast for folks who really are brand new to all of | ||

this, so if this is all new, relax. The important thing is to pick out | this, so if this is all new, relax. The important thing is to pick out | ||

any ideas that really grab your imagination. Especially pay attention | any ideas that really grab your imagination. Especially pay attention | ||

| Line 64: | Line 83: | ||

So, without any further ado, welcome to one hell of a new hobby. | So, without any further ado, welcome to one hell of a new hobby. | ||

<center><div style="background-color:#DDDDFF;border-color:#CCCCDD;border-style:solid;width:80%;padding:0 1em 1em 1em;text-align:left;"> | |||

'''Going deeper…''' | |||

*[http://www.xiph.org/about/ About Xiph.Org]: Why you should care about open media | |||

*[http://www.0xdeadbeef.com/weblog/2010/01/html5-video-and-h-264-what-history-tells-us-and-why-were-standing-with-the-web/ HTML5 Video and H.264: what history tells us and why we're standing with the web]: Chris Blizzard of Mozilla on free formats and the open web | |||

*[http://diveintohtml5.org/video.html Dive into HTML5]: tutorial on HTML5 web video | |||

*[http://webchat.freenode.net/?channels=xiph Chat with the creators of the video] via freenode IRC in #xiph. | |||

</div></center> | |||

<br style="clear:both;"/> | <br style="clear:both;"/> | ||

==Analog vs Digital== | ==Analog vs Digital== | ||

[[Image:Dmpfg_004.jpg| | [[Image:Dmpfg_004.jpg|360px|right]] | ||

[[Image:Dmpfg_006.jpg|360px|right]] | |||

[[Image:Dmpfg_007.jpg|360px|right]] | |||

Sound is the propagation of pressure waves through air, spreading out | <small>[[Talk:A_Digital_Media_Primer_For_Geeks_(episode_1)#Analog_vs_Digital|Discuss this section]]</small> | ||

[[Wikipedia:Sound|Sound]] is the propagation of pressure waves through air, spreading out | |||

from a source like ripples spread from a stone tossed into a pond. A | from a source like ripples spread from a stone tossed into a pond. A | ||

microphone, or the human ear for that matter, transforms these passing | microphone, or the human ear for that matter, transforms these passing | ||

| Line 75: | Line 108: | ||

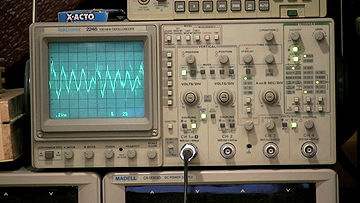

That audio signal is a one-dimensional function, a single value | That audio signal is a one-dimensional function, a single value | ||

varying over time. If we slow the 'scope down a bit... that should be | varying over time. If we slow the [[Wikipedia:Oscilloscope|'scope]] down a bit... that should be | ||

a little easier to see. A few other aspects of the signal are | a little easier to see. A few other aspects of the signal are | ||

important. It's continuous in both value and time; that is, at any | important. It's [[Wikipedia:Continuous_function|continuous]] in both value and time; that is, at any | ||

given time it can have any real value, and there's a smoothly varying | given time it can have any real value, and there's a smoothly varying | ||

value at every point | value at every point in time. No matter how much we zoom in, there | ||

are no discontinuities, no singularities, no instantaneous steps or | are no discontinuities, no singularities, no instantaneous steps or | ||

points where the signal ceases to exist. It's defined | points where the signal ceases to exist. It's defined | ||

everywhere. Classic continuous math works very well on these signals. | everywhere. Classic continuous math works very well on these signals. | ||

A digital signal on the other hand is discrete in both value and time. | A digital signal on the other hand is [[Wikipedia:Discrete_math|discrete]] in both value and time. | ||

In the simplest and most common system, called Pulse Code Modulation, | In the simplest and most common system, called [[Wikipedia:Pulse code modulation|Pulse Code Modulation]], | ||

one of a fixed number of possible values directly represents the | one of a fixed number of possible values directly represents the | ||

instantaneous signal amplitude at points in time spaced a fixed | instantaneous signal amplitude at points in time spaced a fixed | ||

distance apart. The end result is a stream of digits. | distance apart. The end result is a stream of digits. | ||
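
The description of Pulse Code Modulation above can be sketched in a few lines. This is only an illustration under stated assumptions: the function name <code>pcm_encode</code>, the 1 kHz test tone, and the choice of 16-bit signed values are all made up for the example and are not part of the video.

```python
import math

def pcm_encode(signal, sample_rate, duration, bits=16):
    """Sample signal(t) at evenly spaced instants and round each value
    to one of a fixed set of signed integer levels."""
    full_scale = 2 ** (bits - 1) - 1              # 32767 for 16 bits
    count = round(sample_rate * duration)
    samples = []
    for n in range(count):
        t = n / sample_rate                       # points in time a fixed distance apart
        value = max(-1.0, min(1.0, signal(t)))    # keep the input within [-1, 1]
        samples.append(round(value * full_scale)) # one of a fixed number of values
    return samples

def tone(t):
    """Hypothetical 'analog' input: a 1 kHz sine at half amplitude."""
    return 0.5 * math.sin(2 * math.pi * 1000 * t)

pcm = pcm_encode(tone, sample_rate=8000, duration=0.001)
print(pcm)  # eight integers: the "stream of digits"
```

The only two decisions the encoder makes are when to look at the signal and which of the allowed values to write down, which is why so few parameters are needed to describe PCM.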

Now this looks an awful lot like this. It seems intuitive that we | Now this looks an awful lot like this. It seems intuitive that we | ||

should somehow be able to rigorously transform one into the other, and | should somehow be able to rigorously transform one into the other, and | ||

good news, the Sampling Theorem says we can and tells us | good news, the [[Wikipedia:Nyquist-Shannon sampling theorem|Sampling Theorem]] says we can and tells us | ||

how. Published in its most recognizable form by Claude Shannon in 1949 | how. Published in its most recognizable form by [[Wikipedia:Claude Shannon|Claude Shannon]] in 1949 | ||

and built on the work of Nyquist, and Hartley, and tons of others, the | and built on the work of [[Wikipedia:Harry Nyquist|Nyquist]], and [[Wikipedia:Ralph Hartley|Hartley]], and tons of others, the | ||

sampling theorem not only states that we can go back and | sampling theorem not only states that we can go back and | ||

forth between analog and digital, but also lays | forth between analog and digital, but also lays | ||

down a set of conditions for which conversion is lossless and the two | down a set of conditions for which conversion is lossless and the two | ||

representations become equivalent and | representations become equivalent and interchangeable. When the | ||

lossless conditions aren't met, the sampling theorem tells us how and | lossless conditions aren't met, the sampling theorem tells us how and | ||

how much information is lost or corrupted. | how much information is lost or corrupted. | ||

| Line 107: | Line 139: | ||

audio comes from an originally analog source. You may also think that | audio comes from an originally analog source. You may also think that | ||

since computers are fairly recent, analog signal technology must have | since computers are fairly recent, analog signal technology must have | ||

come first. Nope. Digital is actually older. The telegraph predates | come first. Nope. Digital is actually older. The [[Wikipedia:Telegraph|telegraph]] predates | ||

the telephone by half a century and was already fully mechanically | the telephone by half a century and was already fully mechanically | ||

automated by the 1860s, sending coded, multiplexed digital signals | automated by the 1860s, sending coded, multiplexed digital signals | ||

long distances. You know... Tickertape. Harry Nyquist of Bell Labs was | long distances. You know... [[Wikipedia:Tickertape|tickertape]]. Harry Nyquist of [[Wikipedia:Bell_labs|Bell Labs]] was | ||

researching telegraph pulse transmission when he published his | researching telegraph pulse transmission when he published his | ||

description of what later became known as the Nyquist frequency, the | description of what later became known as the [[Wikipedia:Nyquist_frequency|Nyquist frequency]], the | ||

core concept of the sampling theorem. Now, it's true the telegraph | core concept of the sampling theorem. Now, it's true the telegraph | ||

was transmitting symbolic information, text, not a digitized analog | was transmitting symbolic information, text, not a digitized analog | ||

| Line 118: | Line 150: | ||

digital signal technology progressed rapidly and side-by-side. | digital signal technology progressed rapidly and side-by-side. | ||

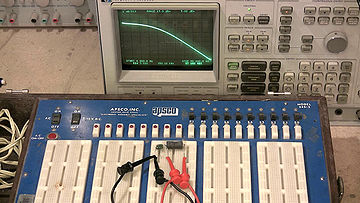

Audio had always been manipulated as an analog signal because... well, | Audio had always been manipulated as an analog signal because... well, | ||

gee it's so much easier. A second-order | gee, it's so much easier. A [[Wikipedia:Low-pass_filter#Continuous-time_low-pass_filters|second-order low-pass filter]], for example, | ||

requires two passive components. An all-analog short-time Fourier | requires two passive components. An all-analog [[Wikipedia:Short-time_Fourier_transform|short-time Fourier | ||

transform, a few hundred. Well, maybe a thousand if you want to build | transform]], a few hundred. Well, maybe a thousand if you want to build | ||

something really fancy | something really fancy (bang on the [http://www.testequipmentdepot.com/usedequipment/hewlettpackard/spectrumanalyzers/3585a.htm 3585]). Processing signals | ||

digitally requires millions to billions of transistors running at | digitally requires millions to billions of transistors running at | ||

microwave frequencies, support hardware at very least to digitize and | microwave frequencies, support hardware at very least to digitize and | ||

| Line 133: | Line 164: | ||

So we come to the conclusion that analog is the only practical way to | So we come to the conclusion that analog is the only practical way to | ||

do much with audio... well, unless you happen to have a billion | do much with audio... well, unless you happen to have a billion | ||

transistors and all the other things just lying around. And since we | transistors and all the other things just lying around. And [[Wikipedia:File:Transistor_Count_and_Moore's_Law_-_2008.svg|since we | ||

do, digital signal processing becomes very attractive. | do]], digital signal processing becomes very attractive. | ||

For one thing, analog componentry just doesn't have the flexibility of | For one thing, analog componentry just doesn't have the flexibility of | ||

a general purpose computer. Adding a new function to this | a general purpose computer. Adding a new function to this | ||

beast... yeah, it's probably not going to happen. On a digital | beast [the 3585]... yeah, it's probably not going to happen. On a digital | ||

processor though, just write a new program. Software isn't trivial, | processor though, just write a new program. Software isn't trivial, | ||

but it is | but it is a lot easier. | ||

Perhaps more importantly, though, every analog component is an | Perhaps more importantly, though, every analog component is an | ||

approximation. There's no such thing as a perfect transistor, or a | approximation. There's no such thing as a perfect transistor, or a | ||

perfect inductor, or a perfect capacitor. In analog, every component | perfect inductor, or a perfect capacitor. In analog, every component | ||

adds noise and distortion, usually not very much, but it adds up. Just | adds [[Wikipedia:Johnson–Nyquist_noise|noise]] and [[Wikipedia:Distortion#Electronic_signals|distortion]], usually not very much, but it adds up. Just | ||

transmitting an analog signal, especially over long distances, | transmitting an analog signal, especially over long distances, | ||

progressively, measurably, irretrievably corrupts it. Besides, all of | progressively, measurably, irretrievably corrupts it. Besides, all of | ||

those single-purpose analog components take up | those single-purpose analog components take up a lot of space. Two | ||

lines of code on the billion transistors back here can implement a | lines of code on the billion transistors back here can implement a | ||

filter that would require an inductor the size of a refrigerator. | filter that would require an [[Wikipedia:Inductor|inductor]] the size of a refrigerator. | ||

Digital systems don't have these drawbacks. Digital signals can be | Digital systems don't have these drawbacks. Digital signals can be | ||

stored, copied, manipulated and transmitted without adding any noise | stored, copied, manipulated, and transmitted without adding any noise | ||

or distortion. We do use lossy algorithms from time to time, but the | or distortion. We do use [[Wikipedia:Lossy_compression|lossy]] algorithms from time to time, but the | ||

only unavoidably non-ideal steps are digitization and reconstruction, | only unavoidably non-ideal steps are digitization and reconstruction, | ||

where digital has to interface with all of that messy analog. Messy | where digital has to interface with all of that messy analog. Messy | ||

or not, modern conversion stages are very, very good. By the | or not, modern [[Wikipedia:Digital-to-analog_converter|conversion stages]] are very, very good. By the | ||

standards of our ears, we can consider them practically | standards of our ears, we can consider them practically lossless as | ||

well. | well. | ||

| Line 165: | Line 196: | ||

is the clear winner over analog. So let us then go about storing it, | is the clear winner over analog. So let us then go about storing it, | ||

copying it, manipulating it, and transmitting it. | copying it, manipulating it, and transmitting it. | ||

<center><div style="background-color:#DDDDFF;border-color:#CCCCDD;border-style:solid;width:80%;padding:0 1em 1em 1em;text-align:left;"> | |||

'''Going deeper…''' | |||

*Wikipedia: [[Wikipedia:Nyquist–Shannon_sampling_theorem|Nyquist–Shannon sampling theorem]] | |||

*MIT OpenCourseWare [http://ocw.mit.edu/courses/electrical-engineering-and-computer-science/6-003-signals-and-systems-spring-2010/lecture-notes/ Lecture notes from 6.003 signals and systems.] | |||

*Wikipedia: [[Wikipedia:Passive_analogue_filter_development|The history of analog filters]] such as the [[Wikipedia:RC circuit|RC low-pass]] shown connected to the [[Wikipedia:Spectrum_analyzer|spectrum analyzer]] in the video. | |||

</div></center> | |||

<br style="clear:both;"/> | <br style="clear:both;"/> | ||

==Raw (Digital Audio) Meat== | |||

<small>[[Talk:A_Digital_Media_Primer_For_Geeks_(episode_1)#Raw_.28digital_audio.29_meat|Discuss this section]]</small> | |||

[[Wikipedia:Pcm|Pulse Code Modulation]] is the most common representation for raw audio. | |||

Other practical representations do exist: for example, | |||

the [[Wikipedia:Delta-sigma_modulation|Sigma-Delta coding]] used by the [[Wikipedia:Super_Audio_CD|SACD]], | |||

which is a form of [[Wikipedia:Pulse-density_modulation|Pulse Density Modulation]]. | |||

That said, Pulse Code Modulation is far and away dominant, mainly because it's so mathematically convenient. | |||

An audio engineer can spend an entire career without running into anything else. | |||

PCM encoding can be characterized in three parameters, making it easy to account for every possible PCM variant with mercifully little hassle. | |||

Analog telephone systems traditionally band-limited voice channels to | ===Sample Rate=== | ||

<small>[[Talk:A_Digital_Media_Primer_For_Geeks_(episode_1)#Raw_.28digital_audio.29_meat|Discuss this section]]</small> | |||

[[Image:Dmpfg_009.jpg|360px|right]] | |||

[[Image:Dmpfg_008.jpg|360px|right]] | |||

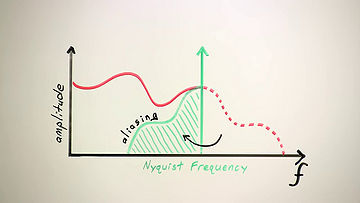

The first parameter is the [[Wikipedia:Sampling_rate|sampling rate]]. | |||

The highest frequency an encoding can represent is called the [[Wikipedia:Nyquist_Frequency|Nyquist Frequency]]. | |||

The Nyquist frequency of PCM happens to be exactly half the sampling rate. | |||

Therefore, the sampling rate directly determines the highest possible frequency in the digitized signal. | |||
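
As a quick worked example of the relationship just stated, the snippet below tabulates the Nyquist frequency for the sampling rates mentioned in this section. The helper names are hypothetical and exist only for this sketch.

```python
def nyquist_frequency(sample_rate_hz):
    """Highest frequency a PCM stream at this rate can represent."""
    return sample_rate_hz / 2

def min_sample_rate(bandwidth_hz):
    """Lowest sampling rate that captures a channel of this bandwidth."""
    return 2 * bandwidth_hz

for rate in (8000, 44100, 48000, 96000, 192000):
    print(f"{rate:>6} Hz sampling -> Nyquist {nyquist_frequency(rate):>7.0f} Hz")

# A voice channel band-limited to 4 kHz needs at least this sampling rate.
print(min_sample_rate(4000))
```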

Analog telephone systems traditionally [[Wikipedia:Bandlimiting|band-limited]] voice channels to | |||

just under 4kHz, so digital telephony and most classic voice | just under 4kHz, so digital telephony and most classic voice | ||

applications use an 8kHz sampling rate | applications use an 8kHz sampling rate: the minimum sampling rate | ||

necessary to capture the entire bandwidth of a 4kHz channel. This is | necessary to capture the entire bandwidth of a 4kHz channel. This is | ||

what an 8kHz sampling rate sounds like | what an 8kHz sampling rate sounds like—a bit muffled but perfectly | ||

intelligible for voice. This is the lowest sampling rate that's ever | intelligible for voice. This is the lowest sampling rate that's ever | ||

been used widely in practice. | been used widely in practice. | ||

| Line 220: | Line 261: | ||

reason. | reason. | ||

Stepping back for just a second, the French mathematician Jean | Stepping back for just a second, the French mathematician [[Wikipedia:Joseph_Fourier|Jean | ||

Baptiste Joseph Fourier showed that we can also think of signals like | Baptiste Joseph Fourier]] showed that we can also think of signals like | ||

audio as a set of component frequencies. This frequency domain | audio as a set of component frequencies. This [[Wikipedia:Frequency_domain|frequency-domain]] | ||

representation is equivalent to the time representation; the signal is | representation is equivalent to the time representation; the signal is | ||

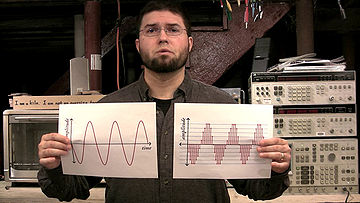

exactly the same, we're just looking at it a different way. Here we see the | exactly the same, we're just looking at it [[Wikipedia:Basis_(linear_algebra)|a different way]]. Here we see the | ||

frequency domain representation of a hypothetical analog signal we | frequency-domain representation of a hypothetical analog signal we | ||

intend to digitally sample. | intend to digitally sample. | ||

| Line 232: | Line 272: | ||

process. First, that a digital signal can't represent any | process. First, that a digital signal can't represent any | ||

frequencies above the Nyquist frequency. Second, and this is the new | frequencies above the Nyquist frequency. Second, and this is the new | ||

part, if we don't remove those frequencies with a | part, if we don't remove those frequencies with a low-pass [[Wikipedia:Audio_filter|filter]] | ||

before sampling, the sampling process will fold them down into the | before sampling, the sampling process will fold them down into the | ||

representable frequency range as aliasing distortion. | representable frequency range as [[Wikipedia:Aliasing|aliasing distortion]]. | ||

Aliasing, in a nutshell, sounds freakin' awful, so it's essential to | Aliasing, in a nutshell, sounds freakin' awful, so it's essential to remove any beyond-Nyquist frequencies ''before sampling'' and ''after reconstruction''. | ||

remove any beyond-Nyquist frequencies before sampling and after | |||

reconstruction. | |||
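
The folding described above can be checked numerically. The sketch below assumes an ideal sampler with no low-pass filter in front of it; <code>alias_frequency</code> and <code>sample_cosine</code> are names invented for the example. It shows that a 5 kHz cosine sampled at 8 kHz produces the same samples as a 3 kHz cosine, so once sampled the two cannot be told apart.

```python
import math

def alias_frequency(freq_hz, sample_rate_hz):
    """Frequency at which an unfiltered tone shows up after sampling."""
    folded = freq_hz % sample_rate_hz              # the spectrum repeats every sample_rate
    return min(folded, sample_rate_hz - folded)    # and mirrors around the Nyquist frequency

def sample_cosine(freq_hz, sample_rate_hz, count):
    """Sample an ideal cosine at evenly spaced instants."""
    return [math.cos(2 * math.pi * freq_hz * n / sample_rate_hz)
            for n in range(count)]

rate = 8000
above = sample_cosine(5000, rate, 16)                         # beyond the 4 kHz Nyquist
below = sample_cosine(alias_frequency(5000, rate), rate, 16)  # its in-band alias
identical = all(math.isclose(a, b, abs_tol=1e-9) for a, b in zip(above, below))
print(alias_frequency(5000, rate), identical)
```

Because the samples are identical, no processing after the fact can separate the alias from a real in-band tone, which is why the filtering has to happen before sampling.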

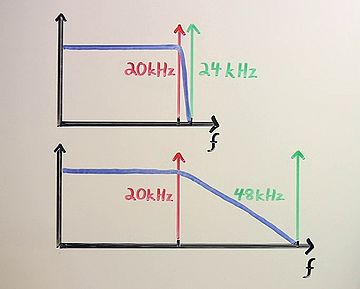

Human frequency perception is considered to extend to about 20kHz. In | Human frequency perception is considered to extend to about 20kHz. In | ||

44.1 or 48kHz sampling, the | 44.1 or 48kHz sampling, the low pass before the sampling stage has to | ||

be extremely sharp to avoid cutting any audible frequencies below | be extremely sharp to avoid cutting any audible frequencies below | ||

20kHz but still not allow frequencies above the Nyquist to leak | [[Wikipedia:Hearing_range|20kHz]] but still not allow frequencies above the Nyquist to leak | ||

forward into the sampling process. This is a difficult filter to | forward into the sampling process. This is a difficult filter to | ||

build and no practical filter succeeds completely. If the sampling | build, and no practical filter succeeds completely. If the sampling | ||

rate is 96kHz or 192kHz on the other hand, the | rate is 96kHz or 192kHz on the other hand, the low pass has an extra | ||

octave or two for its transition band. This is a much easier filter to | [[Wikipedia:Octave_(electronics)|octave]] or two for its [[Wikipedia:Transition_band|transition band]]. This is a much easier filter to | ||

build. Sampling rates beyond 48kHz are actually one of those messy | build. Sampling rates beyond 48kHz are actually one of those messy | ||

analog stage compromises. | analog stage compromises. | ||

<br style="clear:both;"/> | |||

=== | ===Sample Format=== | ||

<small>[[Talk:A_Digital_Media_Primer_For_Geeks_(episode_1)#Raw_.28digital_audio.29_meat|Discuss this section]]</small> | |||

[[Image:Dmpfg_anim.gif|right]] | |||

The second fundamental PCM parameter is the sample format | The second fundamental PCM parameter is the sample format; that is, | ||

the format of each digital number. A number is a number, but a number | the format of each digital number. A number is a number, but a number | ||

can be represented in bits a number of different ways. | can be represented in bits a number of different ways. | ||

Early PCM was eight bit linear, encoded as an unsigned byte. | Early PCM was [[Wikipedia:Quantization_(sound_processing)#Audio_quantization|eight-bit]] [[Wikipedia:Linear_pulse_code_modulation|linear]], encoded as an [[Wikipedia:Signedness|unsigned]] [[Wikipedia:Integer_(computer_science)#Bytes_and_octets|byte]]. | ||

dynamic range is limited to about 50dB and the quantization noise, as | The [[Wikipedia:Dynamic_range#Audio|dynamic range]] is limited to about [[Wikipedia:Decibel|50dB]] | ||

you can hear, is pretty severe. | and the [[Wikipedia:Quantization_error|quantization noise]], as you can hear, is pretty severe. | ||

today. | Eight-bit audio is vanishingly rare today. | ||
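
The "about 50dB" figure for eight-bit linear PCM lines up with the usual rule of thumb of roughly 6 dB per bit. The sketch below uses that idealized formula, which ignores dither and the exact noise statistics, and a hypothetical helper for mapping a sample onto an unsigned byte.

```python
import math

def dynamic_range_db(bits):
    """Ideal ratio between full scale and one quantization step, in dB."""
    return 20 * math.log10(2 ** bits)

def to_unsigned_8bit(x):
    """Map a value in [-1.0, 1.0] onto an unsigned byte; 128 is silence."""
    x = max(-1.0, min(1.0, x))
    return min(255, round(x * 128) + 128)

for bits in (8, 16, 24):
    print(f"{bits:>2} bits -> about {dynamic_range_db(bits):.1f} dB")

print(to_unsigned_8bit(-1.0), to_unsigned_8bit(0.0), to_unsigned_8bit(1.0))
```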

Digital telephony typically uses one of two related non-linear eight | Digital telephony typically uses one of two related non-linear eight bit encodings called [[Wikipedia:A-law_algorithm|A-law]] and [[Wikipedia:Μ-law_algorithm|μ-law]]. | ||

bit encodings called A-law and | These formats encode a roughly [[Wikipedia:Audio_bit_depth#Dynamic_range|14 bit dynamic range]] into eight bits by spacing the higher amplitude values farther apart. | ||

14 bit dynamic range into eight bits by spacing the higher amplitude | A-law and mu-law obviously improve quantization noise compared to linear 8-bit, and voice harmonics especially hide the remaining quantization noise well. | ||

values farther apart. A-law and mu-law obviously improve quantization | All three eight-bit encodings (linear, A-law, and mu-law) are typically paired with an 8kHz sampling rate, though I'm demonstrating them here at 48kHz. | ||

noise compared to linear 8-bit, and voice harmonics especially hide | |||

the remaining quantization noise well. All three eight bit encodings | |||

linear, A-law, and mu-law | |||

rate, though I'm demonstrating them here at 48kHz. | |||
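
To see what "spacing the higher amplitude values farther apart" buys, here is a minimal sketch of the continuous μ-law curve with μ = 255. It is an assumption-laden illustration: real telephony codecs use a segmented approximation of this curve on integer input, and the 8-bit quantizer below is a simplification invented for the example.

```python
import math

MU = 255  # value used by the mu-law variant of telephony companding

def mu_compress(x):
    """Continuous mu-law curve mapping [-1, 1] onto [-1, 1]."""
    return math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)

def mu_expand(y):
    """Inverse of mu_compress."""
    return math.copysign(math.expm1(abs(y) * math.log1p(MU)) / MU, y)

def encode_8bit(x):
    """Compress, then quantize uniformly to a signed 8-bit code."""
    return round(mu_compress(x) * 127)

def decode_8bit(code):
    """Undo the uniform quantization, then expand."""
    return mu_expand(code / 127)

for x in (0.001, 0.01, 0.1, 0.9):
    err_mu = abs(decode_8bit(encode_8bit(x)) - x)
    err_linear = abs(round(x * 127) / 127 - x)
    print(f"x={x:<6} mu-law error={err_mu:.5f}  linear 8-bit error={err_linear:.5f}")
```

Quiet samples come through with far less error than in linear eight-bit, while loud samples come through with somewhat more. That is the trade the non-linear spacing makes.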

Most modern PCM uses 16 or 24 bit two's-complement signed integers to | Most modern PCM uses 16- or 24-bit [[Wikipedia:Two's_complement|two's-complement]] signed integers to | ||

encode the range from negative infinity to zero decibels in 16 or 24 | encode the range from negative infinity to zero decibels in 16 or 24 | ||

bits of precision. The maximum absolute value corresponds to zero decibels. | bits of precision. The maximum absolute value corresponds to zero decibels. | ||

As with all the sample formats so far, signals beyond zero decibels and thus | As with all the sample formats so far, signals beyond zero decibels, and thus | ||

beyond the maximum representable range are clipped. | beyond the maximum representable range, are [[Wikipedia:Clipping_(audio)|clipped]]. | ||
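
A small sketch of what clipping and "zero decibels" mean for 16-bit samples. The helper names are hypothetical, and treating ±1.0 as full scale on the input side is an assumption made for the example.

```python
import math

INT16_MAX = 32767
INT16_MIN = -32768

def float_to_int16(x):
    """Scale a value where +/-1.0 is full scale; anything beyond is clipped."""
    return max(INT16_MIN, min(INT16_MAX, round(x * INT16_MAX)))

def dbfs(sample, full_scale=INT16_MAX):
    """Level relative to full scale; digital silence is negative infinity."""
    if sample == 0:
        return -math.inf
    return 20 * math.log10(abs(sample) / full_scale)

print(float_to_int16(0.5), float_to_int16(1.5))    # the second value is clipped
print(dbfs(INT16_MAX), dbfs(16384), dbfs(0))
```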

In mixing and mastering, it's not unusual to use floating point | In mixing and mastering, it's not unusual to use [[Wikipedia:Floating_point|floating-point]] | ||

numbers for PCM instead of integers. A 32 bit IEEE754 float, that's | numbers for PCM instead of [[Wikipedia:Integer_(computer_science)|integers]]. A 32 bit [[Wikipedia:IEEE_754-2008|IEEE754]] float, that's | ||

the normal kind of floating point you see on current computers, has 24 | the normal kind of floating point you see on current computers, has 24 | ||

bits of resolution, but an eight-bit floating point exponent increases | bits of resolution, but an eight-bit floating-point exponent increases | ||

the representable range. Floating point usually represents zero | the representable range. Floating point usually represents zero | ||

decibels as +/-1.0, and because floats can obviously represent | decibels as +/-1.0, and because floats can obviously represent | ||

considerably beyond that, temporarily exceeding zero decibels during | considerably beyond that, temporarily exceeding zero decibels during | ||

the mixing process doesn't cause clipping. Floating point PCM takes | the mixing process doesn't cause clipping. Floating-point PCM takes | ||

up more space, so it tends to be used only as an intermediate | up more space, so it tends to be used only as an intermediate | ||

production format. | production format. | ||
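
The headroom argument can be illustrated with a toy mix. This is a sketch under assumptions: the two three-sample "tracks" and the function names are invented, and <code>mix_fixed</code> only imitates a format that cannot exceed zero decibels by clipping the intermediate sum.

```python
def clip(x, limit=1.0):
    """Limit a value to the range [-limit, limit]."""
    return max(-limit, min(limit, x))

def mix_float(a, b, gain):
    """Sum in floating point, apply gain, and clip only at the very end."""
    return [clip((x + y) * gain) for x, y in zip(a, b)]

def mix_fixed(a, b, gain):
    """Same mix, but the intermediate sum is clipped to full scale first."""
    return [clip(clip(x + y) * gain) for x, y in zip(a, b)]

track_a = [0.9, -0.8, 0.7]
track_b = [0.6, -0.7, 0.2]

print(mix_float(track_a, track_b, 0.5))  # peaks survive the temporary overshoot
print(mix_fixed(track_a, track_b, 0.5))  # peaks were flattened before the gain
```

In the floating-point path the sum briefly exceeds ±1.0 and is then brought back into range by the gain stage, so nothing is lost. In the clipped path the first two samples have already been damaged by the time the gain is applied.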

| Line 290: | Line 327: | ||

Lastly, most general purpose computers still read and | Lastly, most general purpose computers still read and | ||

write data in octet bytes, so it's important to remember that samples | write data in octet bytes, so it's important to remember that samples | ||

bigger than eight bits can be in big or little endian order, and both | bigger than eight bits can be in [[Wikipedia:Endianness|big- or little-endian order]], and both | ||

endiannesses are common. For example, Microsoft WAV files are little endian, | endiannesses are common. For example, Microsoft [[Wikipedia:WAV|WAV]] files are little-endian, | ||

and Apple AIFC files tend to be big-endian. Be aware of it. | and Apple [[Wikipedia:AIFC|AIFC]] files tend to be big-endian. Be aware of it. | ||
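
Byte order is easy to see with Python's standard <code>struct</code> module. The sample value below is arbitrary and chosen only so the two bytes are easy to tell apart.

```python
import struct

sample = 0x1234  # one arbitrary 16-bit sample

little = struct.pack("<h", sample)  # least significant byte first
big = struct.pack(">h", sample)     # most significant byte first

# Reading little-endian bytes as if they were big-endian gives a different number.
(misread,) = struct.unpack(">h", little)

print(little.hex(), big.hex(), misread)
```

Nothing in the raw bytes says which order was used, so the byte order has to come from the container format or from out-of-band knowledge.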

===Channels=== | |||

<small>[[Talk:A_Digital_Media_Primer_For_Geeks_(episode_1)#Raw_.28digital_audio.29_meat|Discuss this section]]</small> | |||

The third PCM parameter is the number of [[Wikipedia:Multichannel_audio|channels]]. | |||

The convention in raw PCM is to encode multiple channels by interleaving the samples of each channel together into a single stream. | |||

Straightforward and extensible. | |||
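
Interleaving is simple enough to show directly. The function names and the tiny stereo example are made up for illustration.

```python
def interleave(channels):
    """Merge per-channel sample lists into one stream, frame by frame."""
    return [sample for frame in zip(*channels) for sample in frame]

def deinterleave(stream, channel_count):
    """Split an interleaved stream back into per-channel lists."""
    return [stream[c::channel_count] for c in range(channel_count)]

left = [1, 2, 3]
right = [-1, -2, -3]

stream = interleave([left, right])
print(stream)                   # left and right samples alternate
print(deinterleave(stream, 2))  # the original two channels again
```

Adding a channel only changes the stride through the stream, which is what makes the convention extensible.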

===Done!=== | |||

And that's it! That describes every PCM representation ever. Done. | And that's it! That describes every PCM representation ever. Done. | ||

Digital audio is | Digital audio is ''so easy''! There's more to do of course, but at this | ||

point we've got a nice useful chunk of audio data, so let's get some | point we've got a nice useful chunk of audio data, so let's get some | ||

video too. | video too. | ||

< | <center><div style="background-color:#DDDDFF;border-color:#CCCCDD;border-style:solid;width:80%;padding:0 1em 1em 1em;text-align:left;"> | ||

==Video | '''Going deeper…''' | ||

* [[Wikipedia:Roll-off|Wikipedia's article on filter roll-off]], to learn why it's hard to build analog filters with a very narrow [[Wikipedia:Transition_band|transition band]] between the [[Wikipedia:Passband|passband]] and the [[Wikipedia:Stopband|stopband]]. Filters that achieve such hard edges often do so at the expense of increased [[Wikipedia:Ripple_(filters)#Frequency-domain_ripple|ripple]] and [http://www.ocf.berkeley.edu/~ashon/audio/phase/phaseaud2.htm phase distortion]. | |||

* [http://wiki.multimedia.cx/index.php?title=PCM Some more minutiae] about PCM in practice. | |||

* [[Wikipedia:DPCM|DPCM]] and [[Wikipedia:ADPCM|ADPCM]], simple audio codecs loosely inspired by PCM. | |||

</div></center> | |||

==Video Vegetables (they're good for you!)== | |||

[[Image:Dmpfg_010.jpg|360px|right]] | |||

<small>[[Talk:A_Digital_Media_Primer_For_Geeks_(episode_1)#Video_vegetables_.28they.27re_good_for_you.21.29|Discuss this section]]</small> | |||

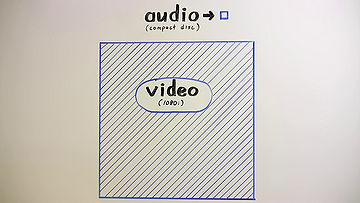

One could think of video as being like audio but with two additional | One could think of video as being like audio but with two additional | ||

| Line 317: | Line 363: | ||

Audio and video are obviously quite different in practice. For one, | Audio and video are obviously quite different in practice. For one, | ||

compared to audio, video is huge. Raw CD audio is about 1.4 megabits | compared to audio, video is huge. [[Wikipedia:Red_Book_(audio_Compact_Disc_standard)#Technical_details|Raw CD audio]] is about 1.4 megabits | ||

per second. Raw 1080i HD video is over 700 megabits per second. That's | per second. Raw [[Wikipedia:1080i|1080i]] HD video is over 700 megabits per second. That's | ||

more than 500 times more data to capture, process and store per | more than 500 times more data to capture, process, and store per | ||

second. By Moore's law... that's... let's see... roughly eight | second. By [[Wikipedia:Moore's_law|Moore's law]]... that's... let's see... roughly eight | ||

doublings times two years, so yeah, computers requiring about an extra | doublings times two years, so yeah, computers requiring about an extra | ||

fifteen years to handle raw video after getting raw audio down pat was | fifteen years to handle raw video after getting raw audio down pat was | ||

| Line 331: | Line 377: | ||

standards committees that govern broadcast video have always been very | standards committees that govern broadcast video have always been very | ||

concerned with backward compatibility. Up until just last year in the | concerned with backward compatibility. Up until just last year in the | ||

US, a sixty year old black and white television could still show a | US, a sixty-year-old black and white television could still show a | ||

normal analog television broadcast. That's actually a really neat | normal [[Wikipedia:NTSC|analog television broadcast]]. That's actually a really neat | ||

trick. | trick. | ||

| Line 348: | Line 394: | ||

all completely here, so we'll cover the broad fundamentals. | all completely here, so we'll cover the broad fundamentals. | ||
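The size comparison between raw audio and raw video earlier in this section can be checked with a little arithmetic. This is only a rough sketch: the CD audio parameters are standard, but the video figures (30 frames per second, 8-bit 4:2:0 at 12 bits per pixel) are assumptions chosen for illustration, and the function names are made up.

```python
# Rough raw bitrates; the video parameters below are assumptions for illustration.

def pcm_bitrate(sample_rate_hz, bits_per_sample, channels):
    """Bits per second of uncompressed PCM audio."""
    return sample_rate_hz * bits_per_sample * channels

def raw_video_bitrate(width, height, frames_per_second, bits_per_pixel):
    """Bits per second of uncompressed video."""
    return width * height * frames_per_second * bits_per_pixel

cd_audio = pcm_bitrate(44_100, 16, 2)              # CD audio: 44.1 kHz, 16-bit, stereo
hd_video = raw_video_bitrate(1920, 1080, 30, 12)   # assumed: 30 frames/s, 8-bit 4:2:0

print(f"CD audio:    {cd_audio / 1e6:.2f} Mbit/s")
print(f"1080i video: {hd_video / 1e6:.0f} Mbit/s")
print(f"ratio:       {hd_video / cd_audio:.0f}x")
```

Other reasonable choices (4:2:2 sampling, 10-bit samples) push the video figure higher still.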

=== | ===Resolution and Aspect=== | ||

[[Image:Dmpfg_011.jpg|360px|right]] | |||

<small>[[Talk:A_Digital_Media_Primer_For_Geeks_(episode_1)#Video_vegetables_.28they.27re_good_for_you.21.29|Discuss this section]]</small> | |||

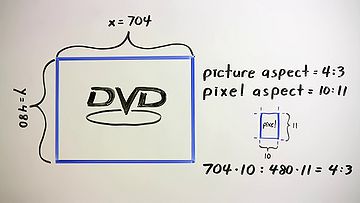

The most obvious raw video parameters are the width and height of the | The most obvious raw video parameters are the width and height of the | ||

| Line 354: | Line 403: | ||

alone don't actually specify the absolute width and height of the | alone don't actually specify the absolute width and height of the | ||

picture, as most broadcast-derived video doesn't use square pixels. | picture, as most broadcast-derived video doesn't use square pixels. | ||

The number of scanlines in a broadcast image was fixed, but the | The number of [[Wikipedia:Scan_line|scanlines]] in a broadcast image was fixed, but the | ||

effective number of horizontal pixels was a function of channel | effective number of horizontal pixels was a function of channel | ||

bandwidth. Effective horizontal resolution could result in pixels that | [[Wikipedia:Bandwidth_(signal_processing)|bandwidth]]. Effective horizontal resolution could result in pixels that | ||

were either narrower or wider than the spacing between scanlines. | were either narrower or wider than the spacing between scanlines. | ||

| Line 363: | Line 412: | ||

amount of digital video also uses non-square pixels. For example, a | amount of digital video also uses non-square pixels. For example, a | ||

normal 4:3 aspect NTSC DVD is typically encoded with a display | normal 4:3 aspect NTSC DVD is typically encoded with a display | ||

resolution of 704 by 480, a ratio wider than 4:3. In this case, the | resolution of [[Wikipedia:DVD-Video#Frame_size_and_frame_rate|704 by 480]], a ratio wider than 4:3. In this case, the | ||

pixels themselves are assigned an aspect ratio of 10:11, making them | pixels themselves are assigned an aspect ratio of [[Wikipedia:Standard-definition_television#Resolution|10:11]], making them | ||

taller than they are wide and narrowing the image horizontally to the | taller than they are wide and narrowing the image horizontally to the | ||

correct aspect. Such an image has to be resampled to show properly on | correct aspect. Such an image has to be resampled to show properly on | ||

a digital display with square pixels. | a digital display with square pixels. | ||
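The DVD example works out neatly in numbers. A minimal sketch, assuming the pixel aspect ratio is expressed as width:height of one stored pixel; `display_size` is a hypothetical helper, not part of any library.

```python
from fractions import Fraction

def display_size(stored_width, stored_height, pixel_aspect):
    """Return the square-pixel (width, height) for a stored frame.

    pixel_aspect is the width:height ratio of one stored pixel.
    """
    display_width = stored_width * pixel_aspect
    return display_width, Fraction(stored_height)

width, height = display_size(704, 480, Fraction(10, 11))
print(width, height, width / height)  # 640 480 4/3
```

Using exact fractions avoids rounding surprises; a real player would also have to pick a resampling filter, which this sketch ignores.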

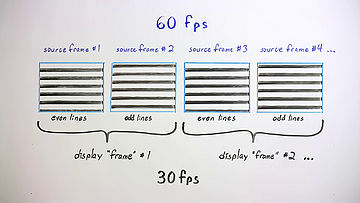

=== | ===Frame Rate and Interlacing=== | ||

[[Image:Dmpfg_012.jpg|360px|right]] | |||

The second obvious video parameter is the frame rate, the number of | <small>[[Talk:A_Digital_Media_Primer_For_Geeks_(episode_1)#Video_vegetables_.28they.27re_good_for_you.21.29|Discuss this section]]</small> | ||

The second obvious video parameter is the [[Wikipedia:Frame_rate|frame rate]], the number of | |||

full frames per second. Several standard frame rates are in active | full frames per second. Several standard frame rates are in active | ||

use. Digital video, in one form or another, can use all of them. Or, | use. Digital video, in one form or another, can use all of them. Or, | ||

| Line 377: | Line 429: | ||

changes adaptively over the course of the video. The higher the frame | changes adaptively over the course of the video. The higher the frame | ||

rate, the smoother the motion and that brings us, unfortunately, to | rate, the smoother the motion and that brings us, unfortunately, to | ||

interlacing. | [[Wikipedia:Interlace|interlacing]]. | ||

In the very earliest days of broadcast video, engineers sought the | In the very earliest days of broadcast video, engineers sought the | ||

fastest practical | fastest practical frame rate to smooth motion and to minimize [[Wikipedia:Flicker_(screen)|flicker]] | ||

on phosphor-based CRTs. They were also under pressure to use the | on phosphor-based [[Wikipedia:Cathode_ray_tube|CRTs]]. They were also under pressure to use the | ||

least possible bandwidth for the highest resolution and fastest frame | least possible bandwidth for the highest resolution and fastest frame | ||

rate. Their solution was to interlace the video where the even lines | rate. Their solution was to interlace the video where the even lines | ||

| Line 390: | Line 442: | ||

rate is actually 60 full frames per second, and half of each frame, | rate is actually 60 full frames per second, and half of each frame, | ||

every other line, is simply discarded. This is why we can't | every other line, is simply discarded. This is why we can't | ||

deinterlace a video simply by combining two fields into one frame; | [[Wikipedia:Deinterlacing|deinterlace]] a video simply by combining two fields into one frame; | ||

they're not actually from one frame to begin with. | they're not actually from one frame to begin with. | ||
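A toy model may make the field problem concrete. In this sketch (all names hypothetical), each source frame simply stores its capture time in every pixel, each field keeps every other line, and a naive "weave" of two consecutive fields mixes lines from two different instants.

```python
def make_frame(t, height=4, width=4):
    """Hypothetical source frame at time t: every pixel just stores t."""
    return [[t] * width for _ in range(height)]

def to_field(frame, parity):
    """Keep only the even (parity 0) or odd (parity 1) lines of a frame."""
    return frame[parity::2]

def weave(top_field, bottom_field):
    """Naively interleave two fields back into one full-height frame."""
    frame = []
    for top_line, bottom_line in zip(top_field, bottom_field):
        frame.append(top_line)
        frame.append(bottom_line)
    return frame

# Two consecutive fields come from source frames captured at different instants.
field_a = to_field(make_frame(t=0), parity=0)   # even lines from time 0
field_b = to_field(make_frame(t=1), parity=1)   # odd lines from time 1

woven = weave(field_a, field_b)
print([line[0] for line in woven])  # [0, 1, 0, 1]: lines alternate between two instants
```

With real footage, anything that moved between the two instants shows up as comb-like tearing in the woven frame.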

<br style="clear:both;"/> | |||

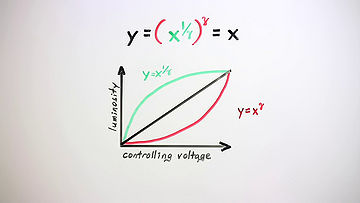

===Gamma and its Correction=== | |||

[[Image:Dmpfg_013.jpg|360px|right]] | |||

<small>[[Talk:A_Digital_Media_Primer_For_Geeks_(episode_1)#Video_vegetables_.28they.27re_good_for_you.21.29|Discuss this section]]</small> | |||

The cathode ray tube was the only available display technology for | The cathode ray tube was the only available display technology for | ||

| Line 406: | Line 462: | ||

fantastically expensive anyway, and hopefully many, many television | fantastically expensive anyway, and hopefully many, many television | ||

sets which best be as inexpensive as possible, engineers decided to | sets which best be as inexpensive as possible, engineers decided to | ||

add the necessary gamma correction circuitry to the cameras rather | add the necessary [[Wikipedia:Gamma_correction|gamma correction]] circuitry to the cameras rather | ||

than the sets. Video transmitted over the airwaves would thus have a | than the sets. Video transmitted over the airwaves would thus have a | ||

nonlinear intensity using the inverse of the set's gamma exponent, so that | nonlinear intensity using the inverse of the set's gamma exponent, so that | ||

| Line 426: | Line 482: | ||

its finest intensity discrimination, and therefore uses the available | its finest intensity discrimination, and therefore uses the available | ||

scale resolution more efficiently. Although CRTs are currently | scale resolution more efficiently. Although CRTs are currently | ||

vanishing, a standard sRGB computer display still uses a nonlinear | vanishing, a standard [[Wikipedia:sRGB|sRGB]] computer display still uses a nonlinear | ||

intensity curve similar to television, with a linear ramp near black, | intensity curve similar to television, with a linear ramp near black, | ||

followed by an exponential curve with a gamma exponent of 2.4. This | followed by an exponential curve with a gamma exponent of 2.4. This | ||

encodes a sixteen bit linear range down into eight bits. | encodes a sixteen bit linear range down into eight bits. | ||
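As a sketch of what such a curve looks like in practice, here is the sRGB transfer function with its linear ramp near black and its 2.4 exponent. The function names are made up, and values are assumed to be normalized to the range 0 to 1.

```python
def srgb_encode(linear):
    """Map linear light in [0, 1] to a nonlinear sRGB value in [0, 1]."""
    if linear <= 0.0031308:
        return 12.92 * linear                      # linear ramp near black
    return 1.055 * linear ** (1 / 2.4) - 0.055     # power curve, exponent 1/2.4

def srgb_decode(encoded):
    """Inverse of srgb_encode: nonlinear sRGB value back to linear light."""
    if encoded <= 0.04045:
        return encoded / 12.92
    return ((encoded + 0.055) / 1.055) ** 2.4

for linear in (0.0, 0.001, 0.01, 0.18, 0.5, 1.0):
    code = round(srgb_encode(linear) * 255)
    print(f"linear {linear:5.3f} -> 8-bit code {code}")
```

Running it shows how many of the 256 available codes are spent on the darkest part of the linear range.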

=== | ===Color and Colorspace=== | ||

[[Image:Dmpfg_014.jpg|360px|right]] | |||

<small>[[Talk:A_Digital_Media_Primer_For_Geeks_(episode_1)#Video_vegetables_.28they.27re_good_for_you.21.29|Discuss this section]]</small> | |||

The human eye has three apparent color channels, red, green, and blue, | The human eye has three apparent color channels, red, green, and blue, | ||

and most displays use these three colors as additive primaries to | and most displays use these three colors as [[Wikipedia:Additive_color|additive primaries]] to | ||

produce a full range of color output. The primary pigments in | produce a full range of color output. The primary pigments in | ||

printing are Cyan, Magenta, and Yellow for the same reason; pigments | printing are [[Wikipedia:CMYK|Cyan, Magenta, and Yellow]] for the same reason; pigments | ||

are subtractive, and each of these pigments subtracts one pure color | are [[Wikipedia:Subtractive_color|subtractive]], and each of these pigments subtracts one pure color | ||

from reflected light. Cyan subtracts red, magenta subtracts green, and | from reflected light. Cyan subtracts red, magenta subtracts green, and | ||

yellow subtracts blue. | yellow subtracts blue. | ||

Video can be and sometimes is represented with red, green, and blue | Video can be, and sometimes is, represented with red, green, and blue | ||

color channels, but RGB video is atypical. The human eye is far more | color channels, but RGB video is atypical. The human eye is far more | ||

sensitive to luminosity than it is to color, and RGB tends to spread | sensitive to [[Wikipedia:Luminance_(relative)|luminosity]] than it is to color, and RGB tends to spread | ||

the energy of an image across all three color channels. That is, the | the energy of an image across all three color channels. That is, the | ||

red plane looks like a red version of the original picture, the green | red plane looks like a red version of the original picture, the green | ||

| Line 452: | Line 511: | ||

For those reasons and because, oh hey, television just happened to | For those reasons and because, oh hey, television just happened to | ||

start out as black and white anyway, video usually is represented as a | start out as black and white anyway, video usually is represented as a | ||

high resolution luma channel | high resolution [[Wikipedia:Luma_(video)|luma channel]]—the black & white—along with | ||

additional, often lower resolution chroma channels, the color. The | additional, often lower resolution [[Wikipedia:Chrominance|chroma channels]], the color. The | ||

luma channel, Y, is produced by weighting and then adding the separate | luma channel, Y, is produced by weighting and then adding the separate | ||

red, green and blue signals. The chroma channels U and V are then | red, green and blue signals. The chroma channels U and V are then | ||

| Line 459: | Line 518: | ||

from red. | from red. | ||

When YUV is scaled, offset and quantized for digital video, it's | When YUV is scaled, offset, and quantized for digital video, it's | ||

usually more correctly called Y'CbCr, but the more generic term YUV is | usually more correctly called [[Wikipedia:Y'CbCr|Y'CbCr]], but the more generic term YUV is | ||

widely used to | widely used to describe all the analog and digital variants of this | ||

color model. | color model. | ||
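The weighting and subtracting can be sketched in a few lines. This assumes the Rec. 601 luma weights and normalized, gamma-encoded inputs, and it stops before the scaling, offset, and quantization step that produces integer Y'CbCr; the function name is hypothetical.

```python
KR, KG, KB = 0.299, 0.587, 0.114   # Rec. 601 luma weights (assumed here)

def rgb_to_ycbcr(r, g, b):
    """Convert normalized gamma-encoded R'G'B' in [0, 1] to (Y', Cb, Cr).

    Y' is in [0, 1]; Cb and Cr are centered on zero, in [-0.5, 0.5].
    """
    y = KR * r + KG * g + KB * b
    cb = 0.5 * (b - y) / (1 - KB)   # blue minus luma, scaled
    cr = 0.5 * (r - y) / (1 - KR)   # red minus luma, scaled
    return y, cb, cr

print(rgb_to_ycbcr(1.0, 1.0, 1.0))  # white: full luma, chroma approximately zero
print(rgb_to_ycbcr(1.0, 0.0, 0.0))  # pure red: Cr at its maximum
```

Rec. 709 uses the same structure with different weights, which is one reason the two standards are not interchangeable.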

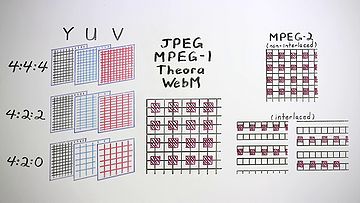

=== | ===Chroma Subsampling=== | ||

[[Image:Dmpfg_015.jpg|360px|right]] | |||

<small>[[Talk:A_Digital_Media_Primer_For_Geeks_(episode_1)#Video_vegetables_.28they.27re_good_for_you.21.29|Discuss this section]]</small> | |||

The U and V chroma channels can have the same resolution as the Y | The U and V chroma channels can have the same resolution as the Y | ||

channel, but because the human eye has far less spatial color | channel, but because the human eye has far less spatial color | ||

resolution than spatial luminosity resolution, chroma resolution is | resolution than spatial luminosity resolution, chroma resolution is | ||

usually halved or even quartered in the horizontal direction, the | usually [[Wikipedia:Chroma_subsampling|halved or even quartered]] in the horizontal direction, the | ||

vertical direction, or both, usually without any significant impact on the | vertical direction, or both, usually without any significant impact on the | ||

apparent raw image quality. Practically every possible subsampling | apparent raw image quality. Practically every possible subsampling | ||

variant has been used at one time or another, but the common choices | variant has been used at one time or another, but the common choices | ||

today are 4:4:4 video, which isn't actually subsampled at all, 4:2:2 video in | today are [[Wikipedia:Chroma_subsampling#4:4:4_Y.27CbCr|4:4:4]] video, which isn't actually subsampled at all, [[Wikipedia:Chroma_subsampling#4:2:2|4:2:2]] video in | ||

which the horizontal resolution of the U and V channels is halved, and | which the horizontal resolution of the U and V channels is halved, and | ||

most common of all, 4:2:0 video in which both the | most common of all, [[Wikipedia:Chroma_subsampling#4:2:0|4:2:0]] video in which both the horizontal and vertical | ||

resolutions of the chroma channels are halved, resulting in U and V | resolutions of the chroma channels are halved, resulting in U and V | ||

planes that are each one quarter the size of Y. | planes that are each one quarter the size of Y. | ||
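Plane sizes for the three common choices are easy to work out. A minimal sketch, assuming even frame dimensions and one byte per sample; the table and helper names are made up for illustration.

```python
# (horizontal divisor, vertical divisor) applied to each chroma plane
SUBSAMPLING = {
    "4:4:4": (1, 1),
    "4:2:2": (2, 1),
    "4:2:0": (2, 2),
}

def plane_sizes(width, height, scheme):
    """Return ((luma_w, luma_h), (chroma_w, chroma_h)) for one frame.

    Assumes width and height are even, to keep the arithmetic exact.
    """
    h_div, v_div = SUBSAMPLING[scheme]
    return (width, height), (width // h_div, height // v_div)

def frame_bytes(width, height, scheme, bytes_per_sample=1):
    """Total size of one frame: one luma plane plus two chroma planes."""
    (yw, yh), (cw, ch) = plane_sizes(width, height, scheme)
    return (yw * yh + 2 * cw * ch) * bytes_per_sample

for scheme in SUBSAMPLING:
    print(scheme, plane_sizes(704, 480, scheme), frame_bytes(704, 480, scheme))
```

For 4:2:0 the two chroma planes together add only half a luma plane, so a frame costs 1.5 bytes per pixel instead of 3.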

The terms 4:2:2, 4:2:0, 4:1:1 and so on and so forth, aren't complete | The terms 4:2:2, 4:2:0, [[Wikipedia:Chroma_subsampling#4:1:1|4:1:1]], and so on and so forth, aren't complete | ||

descriptions of a chroma subsampling. There are multiple possible ways | descriptions of a chroma subsampling. There are multiple possible ways | ||

to position the chroma pixels relative to luma, and again, several | to position the chroma pixels relative to luma, and again, several | ||

variants are in active use for each subsampling. For example, motion | variants are in active use for each subsampling. For example, [[Wikipedia:Motion_Jpeg|motion | ||

JPEG, MPEG-1 video, MPEG-2 video, DV, Theora and WebM all use or can | JPEG]], [[Wikipedia:MPEG-1#Part_2:_Video|MPEG-1 video]], [[Wikipedia:MPEG-2#Video_coding_.28simplified.29|MPEG-2 video]], [[Wikipedia:DV#DV_Compression|DV]], [[Wikipedia:Theora|Theora]], and [[Wikipedia:WebM|WebM]] all use or can | ||

use 4:2:0 subsampling, but they site the chroma pixels three different | use 4:2:0 subsampling, but they site the chroma pixels [http://www.mir.com/DMG/chroma.html three different ways]. | ||

ways. | |||

Motion JPEG, | Motion JPEG, MPEG-1 video, Theora and WebM all site chroma pixels | ||

between luma pixels both horizontally and vertically. | between luma pixels both horizontally and vertically. | ||

MPEG-2 sites chroma pixels between lines, but horizontally aligned with | |||

every other luma pixel. Interlaced modes complicate things somewhat, | every other luma pixel. Interlaced modes complicate things somewhat, | ||

resulting in a siting arrangement that's a tad | resulting in a siting arrangement that's a tad bizarre. | ||

And finally PAL-DV, which is always interlaced, places the chroma | And finally PAL-DV, which is always interlaced, places the chroma | ||

pixels in the same position as every other luma pixel in the | pixels in the same position as every other luma pixel in the | ||

horizontal direction, and vertically alternates chroma channel on | |||

each line. | each line. | ||

| Line 502: | Line 563: | ||

viewer. Got the basic idea, moving on. | viewer. Got the basic idea, moving on. | ||

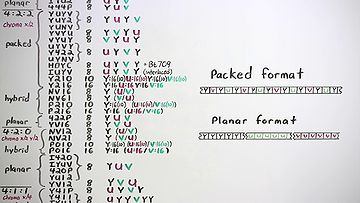

=== | ===Pixel Formats=== | ||

[[Image:Dmpfg_016.jpg|360px|right]] | |||

<small>[[Talk:A_Digital_Media_Primer_For_Geeks_(episode_1)#Video_vegetables_.28they.27re_good_for_you.21.29|Discuss this section]]</small> | |||

In audio, we always represent multiple channels in a PCM stream by | In audio, we always represent multiple channels in a PCM stream by | ||

| Line 508: | Line 572: | ||

packed formats that interleave the color channels, as well as planar | packed formats that interleave the color channels, as well as planar | ||

formats that keep the pixels from each channel together in separate | formats that keep the pixels from each channel together in separate | ||

planes stacked in order in the frame. There are at least 50 different formats in | planes stacked in order in the frame. There are at least [http://www.fourcc.org/yuv.php 50 different formats] in | ||

these two broad categories with possibly ten or fifteen in common use. | these two broad categories with possibly ten or fifteen in common use. | ||

| Line 514: | Line 578: | ||

packing arrangement, and so a different pixel format. For a given | packing arrangement, and so a different pixel format. For a given | ||

unique subsampling, there are usually also several equivalent formats | unique subsampling, there are usually also several equivalent formats | ||

that consist of trivial channel order rearrangements or repackings due either to | that consist of trivial channel order rearrangements or repackings, due either to | ||

convenience once-upon-a-time on some particular piece of hardware or | convenience once-upon-a-time on some particular piece of hardware, or | ||

sometimes just good old-fashioned spite. | sometimes just good old-fashioned spite. | ||

Pixel formats are described by a unique name or fourcc code. There | Pixel formats are described by a unique name or [[Wikipedia:FourCC|fourcc]] code. There | ||

are quite a few of these and there's no sense going over each one now. | are quite a few of these and there's no sense going over each one now. | ||

Google is your friend. Be aware that fourcc codes for raw video | Google is your friend. Be aware that fourcc codes for raw video | ||

specify the pixel arrangement and chroma subsampling, but generally | specify the pixel arrangement and chroma subsampling, but generally | ||

don't imply anything certain about chroma siting or color space. YV12 | don't imply anything certain about chroma siting or color space. [http://www.fourcc.org/yuv.php#YV12 YV12] | ||

video, to pick one, can use JPEG, MPEG-2 or DV chroma siting, and any | video, to pick one, can use JPEG, MPEG-2 or DV chroma siting, and any | ||

one of several YUV colorspace definitions. | one of [[Wikipedia:YUV#BT.709_and_BT.601|several YUV colorspace definitions]]. | ||
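To illustrate the planar versus packed distinction, and how two formats can differ only by a trivial reordering, here is a small sketch. It assumes 8-bit samples, even dimensions, and no row padding; the helper names are hypothetical.

```python
def planar_420_offsets(width, height, fourcc="I420"):
    """Byte offsets of the Y, U and V planes in an 8-bit planar 4:2:0 frame.

    I420 stores Y, then U, then V; YV12 stores Y, then V, then U.
    Assumes even dimensions and no row padding.
    """
    y_size = width * height
    chroma_size = (width // 2) * (height // 2)
    first, second = y_size, y_size + chroma_size
    if fourcc == "I420":
        return {"Y": 0, "U": first, "V": second}
    if fourcc == "YV12":
        return {"Y": 0, "V": first, "U": second}
    raise ValueError(f"unsupported fourcc: {fourcc}")

def pack_yuy2_pair(y0, y1, u, v):
    """Pack two horizontally adjacent 4:2:2 pixels as YUY2: Y0 U Y1 V."""
    return bytes([y0, u, y1, v])

print(planar_420_offsets(704, 480, "I420"))
print(planar_420_offsets(704, 480, "YV12"))
print(pack_yuy2_pair(16, 17, 128, 129).hex())
```

Note that nothing in either layout says where the chroma samples sit relative to luma, or which colorspace they use.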

<br style="clear:both;"/> | |||

=== | ===Done!=== | ||

That wraps up our not so quick and yet very incomplete tour of raw | That wraps up our not-so-quick and yet very incomplete tour of raw | ||

video. The good news is we can already get quite | video. The good news is we can already get quite a lot of real work | ||

done using that overview. In plenty of situations, a frame of video | done using that overview. In plenty of situations, a frame of video | ||

data is a frame of video data. The details matter, greatly, when it | data is a frame of video data. The details matter, greatly, when it | ||

| Line 535: | Line 600: | ||

esteemed viewer is broadly aware of the relevant issues. | esteemed viewer is broadly aware of the relevant issues. | ||

< | <center><div style="background-color:#DDDDFF;border-color:#CCCCDD;border-style:solid;width:80%;padding:0 1em 1em 1em;text-align:left;"> | ||

'''Going deeper…''' | |||

* YCbCr is defined in terms of RGB by the ITU in two incompatible standards: [[Wikipedia:Rec. 601|Rec. 601]] and [[Wikipedia:Rec. 709|Rec. 709]]. Both conversion standards are lossy, which has prompted some to adopt a lossless alternative called [http://wiki.multimedia.cx/index.php?title=YCoCg YCoCg]. | |||

* Learn about [[Wikipedia:High_dynamic_range_imaging|high dynamic range imaging]], which achieves better representation of the full range of brightnesses in the real world by using more than 8 bits per channel. | |||

* Learn about how [[Wikipedia:Trichromatic_vision|trichromatic color vision]] works in humans, and how human color perception is encoded in the [[Wikipedia:CIE 1931 color space|CIE 1931 XYZ color space]]. | |||

** Compare with the [[Wikipedia:Lab_color_space|Lab color space]], mathematically equivalent but structured to account for "perceptual uniformity". | |||

** If we were all [[Wikipedia:Dichromacy|dichromats]] then video would only need two color channels. Some humans might be [[Wikipedia:Tetrachromacy#Possibility_of_human_tetrachromats|tetrachromats]], in which case they would need an additional color channel for video to fully represent their vision. | |||

** [http://www.xritephoto.com/ph_toolframe.aspx?action=coloriq Test your color vision] (or at least your monitor). | |||

</div></center> | |||

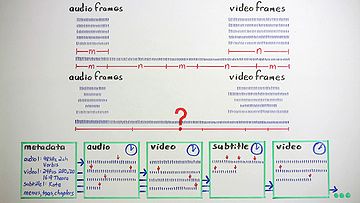

==Containers== | ==Containers== | ||

[[Image:Dmpfg_017.jpg|360px|right]] | |||

<small>[[Talk:A_Digital_Media_Primer_For_Geeks_(episode_1)#Containers|Discuss this section]]</small> | |||

So. We have audio data. We have video data. What remains is the more | So. We have audio data. We have video data. What remains is the more | ||

familiar non-signal data and straight up engineering that software | familiar non-signal data and straight-up engineering that software | ||

developers are used to, and plenty of it. | developers are used to, and plenty of it. | ||

Chunks of raw audio and video data have no externally visible | Chunks of raw audio and video data have no externally-visible | ||

structure, but they're often uniformly sized. We could just string | structure, but they're often uniformly sized. We could just string | ||

them together in a rigid | them together in a rigid predetermined ordering for streaming and | ||

storage, and some simple systems do approximately that. Compressed | storage, and some simple systems do approximately that. Compressed | ||

frames though aren't necessarily a predictable size, and we usually want | frames, though, aren't necessarily a predictable size, and we usually want | ||

some flexibility in using a range of different data types in streams. | some flexibility in using a range of different data types in streams. | ||

If we string random formless data together, we lose the boundaries | If we string random formless data together, we lose the boundaries | ||

| Line 553: | Line 630: | ||

In addition to our signal data, we also have our PCM and video | In addition to our signal data, we also have our PCM and video | ||

parameters. There's probably plenty of other metadata we also want to | parameters. There's probably plenty of other [[Wikipedia:Metadata#Video|metadata]] we also want to | ||

deal with, like audio tags and video chapters and subtitles, all | deal with, like audio tags and video chapters and subtitles, all | ||

essential components of rich media. It makes sense to place this | essential components of rich media. It makes sense to place this | ||

metadata | metadata—that is, data about the data—within the media itself. | ||

Storing and structuring formless data and disparate metadata is the | Storing and structuring formless data and disparate metadata is the | ||

job of a container. Containers provide framing for the data blobs, | job of a [[Wikipedia:Container_format_(digital)|container]]. Containers provide framing for the data blobs, | ||

interleave and identify multiple data streams, provide timing | interleave and identify multiple data streams, provide timing | ||

information, and store the metadata necessary to parse, navigate, | information, and store the metadata necessary to parse, navigate, | ||

manipulate and present the media. In general, any container can hold | manipulate, and present the media. In general, any container can hold | ||

any kind of data. And data can be put into any container. | any kind of data. And data can be put into any container. | ||
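The framing and interleaving job can be sketched with a toy format. The header layout below (stream id, timestamp, payload length) is entirely made up for illustration and does not match Ogg, Matroska, or any other real container.

```python
import struct

# Hypothetical packet header: stream id (1 byte), timestamp in ms (8 bytes),
# payload length (4 bytes), all big-endian. Not any real container's layout.
HEADER = struct.Struct(">BQI")

def mux(packets):
    """Serialize (stream_id, timestamp_ms, payload) tuples into one byte string."""
    out = bytearray()
    for stream_id, timestamp_ms, payload in packets:
        out += HEADER.pack(stream_id, timestamp_ms, len(payload))
        out += payload
    return bytes(out)

def demux(data):
    """Recover the (stream_id, timestamp_ms, payload) tuples from mux() output."""
    packets, pos = [], 0
    while pos < len(data):
        stream_id, timestamp_ms, length = HEADER.unpack_from(data, pos)
        pos += HEADER.size
        packets.append((stream_id, timestamp_ms, data[pos:pos + length]))
        pos += length
    return packets

interleaved = [
    (0, 0, b"video frame 0"),
    (1, 0, b"audio chunk 0"),
    (1, 23, b"audio chunk 1"),
    (0, 33, b"video frame 1, a different size"),
]
assert demux(mux(interleaved)) == interleaved
```

The length field is what preserves packet boundaries when the payloads vary in size. Real containers add much more, such as seeking indexes, error recovery, and stream metadata.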

<center><div style="background-color:#DDDDFF;border-color:#CCCCDD;border-style:solid;width:80%;padding:0 1em 1em 1em;text-align:left;"> | |||

'''Going deeper…''' | |||

* There are several common general-purpose container formats: [[Wikipedia:Audio_Video_Interleave|AVI]], [[Wikipedia:Matroska|Matroska]], [[Wikipedia:Ogg|Ogg]], [[Wikipedia:QuickTime_File_Format|QuickTime]], and [[Wikipedia:Comparison_of_container_formats|many others]]. These can contain and interleave many different types of media streams. | |||

* Some special-purpose containers have been designed that can only hold one format: | |||

** [http://wiki.multimedia.cx/index.php?title=YUV4MPEG2 The y4m format] is the most common single-purpose container for raw YUV video. It can also be stored in a general-purpose container, for example in Ogg using [[OggYUV]]. | |||

** MP3 files use a [[Wikipedia:MP3#File_structure|special single-purpose file format]]. | |||

** [[Wikipedia:WAV|WAV]] and [[Wikipedia:AIFC|AIFC]] are semi-single-purpose formats. They're audio-only, and typically contain raw PCM audio, but are occasionally used to store other kinds of audio data ... even MP3! | |||

</div></center> | |||

<br style="clear:both;"/> | |||

==Credits== | ==Credits== | ||

[[Image:Dmpfg_018.jpg|360px|right]] | |||

[[Image:Dmpfg_019.png|360px|right]] | |||

In the past thirty minutes, we've covered digital audio, video, some | In the past thirty minutes, we've covered digital audio, video, some | ||

history, some math and a little engineering. We've barely scratched the | history, some math and a little engineering. We've barely scratched the | ||

surface, but it's time for a well earned break. | surface, but it's time for a well-earned break. | ||

There's so much more to talk about, so I hope you'll join me again in | There's so much more to talk about, so I hope you'll join me again in | ||

our next episode. Until then | our next episode. Until then—Cheers! | ||

Written by: | Written by: | ||

| Line 578: | Line 669: | ||

and the Xiph.Org Community | and the Xiph.Org Community | ||

Intro, title and credits music: | Intro, title and credits music:<br> | ||

"Boo Boo Coming", by Joel Forrester | "Boo Boo Coming", by Joel Forrester<br> | ||

Performed by the Microscopic Septet | Performed by the [http://microscopicseptet.com/ Microscopic Septet]<br> | ||

Used by permission of Cuneiform Records. | Used by permission of Cuneiform Records.<br> | ||

Original source track All Rights Reserved. | Original source track All Rights Reserved.<br> | ||

www.cuneiformrecords.com | [http://www.cuneiformrecords.com www.cuneiformrecords.com] | ||

This Video Was Produced Entirely With Free and Open Source Software | This Video Was Produced Entirely With Free and Open Source Software:<br> | ||

GNU | [https://www.gnu.org/ GNU]<br> | ||

Linux | [http://www.linux.org/ Linux]<br> | ||

Fedora | [https://getfedora.org/ Fedora]<br> | ||

Cinelerra | [http://cinelerra.org/ Cinelerra]<br> | ||

The Gimp | [https://www.gimp.org/ The Gimp]<br> | ||

Audacity | [https://sourceforge.net/projects/audacity/ Audacity]<br> | ||

Postfish | [https://svn.xiph.org/trunk/postfish/README Postfish]<br> | ||

Gstreamer | [https://gstreamer.freedesktop.org/ Gstreamer]<br> | ||

All trademarks are the property of their respective owners. | |||

<br | ''Complete video'' [https://creativecommons.org/licenses/by-nc-sa/3.0/legalcode CC-BY-NC-SA]<br> | ||

''Text transcript and Wiki edition'' [https://creativecommons.org/licenses/by-sa/3.0/legalcode CC-BY-SA]<br> | |||

A Co-Production of [https://xiph.org/ Xiph.Org] and [https://www.redhat.com/ Red Hat Inc.]<br> | |||

(C) 2010, Some Rights Reserved | |||

<center><font size="+1">''[[/making|Learn more about the making of this video…]]''</font></center> | |||

Latest revision as of 10:08, 25 November 2016

Wiki edition

This first video from Xiph.Org presents the technical foundations of modern digital media via a half-hour firehose of information. One community member called it "a Uni lecture I never got but really wanted."

The program offers a brief history of digital media, a quick summary of the sampling theorem, and a myriad of details on low level audio and video characterization and formatting. It's intended for budding geeks looking to get into video coding, as well as the technically curious who want to know more about the media they wrangle for work or play.

See also: Episode 02: Digital Show and Tell

Players supporting WebM: VLC, Firefox, Chrome, Opera, more…

Players supporting Ogg/Theora: VLC, Firefox, Opera, more…

If you're having trouble with playback in a modern browser or player, please visit our playback troubleshooting and discussion page.

Introduction

Workstations and high-end personal computers have been able to manipulate digital audio pretty easily for about fifteen years now. It's only been about five years that a decent workstation's been able to handle raw video without a lot of expensive special purpose hardware.

But today even most cheap home PCs have the processor power and storage necessary to really toss raw video around, at least without too much of a struggle. So now that everyone has all of this cheap media-capable hardware, more people, not surprisingly, want to do interesting things with digital media, especially streaming. YouTube was the first huge success, and now everybody wants in.

Well good! Because this stuff is a lot of fun!

It's no problem finding consumers for digital media. But here I'd like to address the engineers, the mathematicians, the hackers, the people who are interested in discovering and making things and building the technology itself. The people after my own heart.

Digital media, compression especially, is perceived to be super-elite, somehow incredibly more difficult than anything else in computer science. The big industry players in the field don't mind this perception at all; it helps justify the staggering number of very basic patents they hold. They like the image that their media researchers "are the best of the best, so much smarter than anyone else that their brilliant ideas can't even be understood by mere mortals." This is bunk.

Digital audio and video and streaming and compression offer endless deep and stimulating mental challenges, just like any other discipline. It seems elite because so few people have been involved. So few people have been involved perhaps because so few people could afford the expensive, special-purpose equipment it required. But today, just about anyone watching this video has a cheap, general-purpose computer powerful enough to play with the big boys. There are battles going on today around HTML5 and browsers and video and open vs. closed. So now is a pretty good time to get involved. The easiest place to start is probably understanding the technology we have right now.

This is an introduction. Since it's an introduction, it glosses over a ton of details so that the big picture's a little easier to see. Quite a few people watching are going to be way past anything that I'm talking about, at least for now. On the other hand, I'm probably going to go too fast for folks who really are brand new to all of this, so if this is all new, relax. The important thing is to pick out any ideas that really grab your imagination. Especially pay attention to the terminology surrounding those ideas, because with those, and Google, and Wikipedia, you can dig as deep as interests you.

So, without any further ado, welcome to one hell of a new hobby.

Going deeper…

- About Xiph.Org: Why you should care about open media

- HTML5 Video and H.264: what history tells us and why we're standing with the web: Chris Blizzard of Mozilla on free formats and the open web

- Dive into HTML5: tutorial on HTML5 web video

- Chat with the creators of the video via freenode IRC in #xiph.

Analog vs Digital

Sound is the propagation of pressure waves through air, spreading out from a source like ripples spread from a stone tossed into a pond. A microphone, or the human ear for that matter, transforms these passing ripples of pressure into an electric signal. Right, this is middle school science class, everyone remembers this. Moving on.

That audio signal is a one-dimensional function, a single value varying over time. If we slow the 'scope down a bit... that should be a little easier to see. A few other aspects of the signal are important. It's continuous in both value and time; that is, at any given time it can have any real value, and there's a smoothly varying value at every point in time. No matter how much we zoom in, there are no discontinuities, no singularities, no instantaneous steps or points where the signal ceases to exist. It's defined everywhere. Classic continuous math works very well on these signals.

A digital signal on the other hand is discrete in both value and time. In the simplest and most common system, called Pulse Code Modulation, one of a fixed number of possible values directly represents the instantaneous signal amplitude at points in time spaced a fixed distance apart. The end result is a stream of digits.

Now this looks an awful lot like this. It seems intuitive that we should somehow be able to rigorously transform one into the other, and good news, the Sampling Theorem says we can and tells us how. Published in its most recognizable form by Claude Shannon in 1949 and built on the work of Nyquist, and Hartley, and tons of others, the sampling theorem not only states that we can go back and forth between analog and digital, but also lays down a set of conditions under which conversion is lossless and the two representations become equivalent and interchangeable. When the lossless conditions aren't met, the sampling theorem tells us how and how much information is lost or corrupted.

Up until very recently, analog technology was the basis for practically everything done with audio, and that's not because most audio comes from an originally analog source. You may also think that since computers are fairly recent, analog signal technology must have come first. Nope. Digital is actually older. The telegraph predates the telephone by half a century and was already fully mechanically automated by the 1860s, sending coded, multiplexed digital signals long distances. You know... tickertape. Harry Nyquist of Bell Labs was researching telegraph pulse transmission when he published his description of what later became known as the Nyquist frequency, the core concept of the sampling theorem. Now, it's true the telegraph was transmitting symbolic information, text, not a digitized analog signal, but with the advent of the telephone and radio, analog and digital signal technology progressed rapidly and side-by-side.

Audio had always been manipulated as an analog signal because... well, gee, it's so much easier. A second-order low-pass filter, for example, requires two passive components. An all-analog short-time Fourier transform, a few hundred. Well, maybe a thousand if you want to build something really fancy [bang on the 3585]. Processing signals digitally requires millions to billions of transistors running at microwave frequencies, support hardware at the very least to digitize and reconstruct the analog signals, a complete software ecosystem for programming and controlling that billion-transistor juggernaut, digital storage just in case you want to keep any of those bits for later...

So we come to the conclusion that analog is the only practical way to do much with audio... well, unless you happen to have a billion transistors and all the other things just lying around. And since we do, digital signal processing becomes very attractive.

For one thing, analog componentry just doesn't have the flexibility of a general purpose computer. Adding a new function to this beast [the 3585]... yeah, it's probably not going to happen. On a digital processor though, just write a new program. Software isn't trivial, but it is a lot easier.

Perhaps more importantly, though, every analog component is an approximation. There's no such thing as a perfect transistor, or a perfect inductor, or a perfect capacitor. In analog, every component adds noise and distortion, usually not very much, but it adds up. Just transmitting an analog signal, especially over long distances, progressively, measurably, irretrievably corrupts it. Besides, all of those single-purpose analog components take up a lot of space. Two lines of code on the billion transistors back here can implement a filter that would require an inductor the size of a refrigerator.

Digital systems don't have these drawbacks. Digital signals can be stored, copied, manipulated, and transmitted without adding any noise or distortion. We do use lossy algorithms from time to time, but the only unavoidably non-ideal steps are digitization and reconstruction, where digital has to interface with all of that messy analog. Messy or not, modern conversion stages are very, very good. By the standards of our ears, we can consider them practically lossless as well.

With a little extra hardware, then, most of which is now small and inexpensive due to our modern industrial infrastructure, digital audio is the clear winner over analog. So let us then go about storing it, copying it, manipulating it, and transmitting it.

Going deeper…

- Wikipedia: Nyquist–Shannon sampling theorem

- MIT OpenCourseWare Lecture notes from 6.003 signals and systems.

- Wikipedia: The history of analog filters such as the RC low-pass shown connected to the spectrum analyzer in the video.

Raw (Digital Audio) Meat

Pulse Code Modulation is the most common representation for raw audio.

Other practical representations do exist: for example, the Sigma-Delta coding used by the SACD, which is a form of Pulse Density Modulation. That said, Pulse Code Modulation is far and away dominant, mainly because it's so mathematically convenient. An audio engineer can spend an entire career without running into anything else.

PCM encoding can be characterized in three parameters, making it easy to account for every possible PCM variant with mercifully little hassle.
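Before going through the parameters one by one, here's a minimal Python sketch of what PCM encoding amounts to: read the signal at evenly spaced points in time and round each reading to one of a fixed number of integer levels. The function name `pcm_encode`, the 1kHz test tone, and the choice of signed 16-bit samples are illustrative assumptions, not anything taken from the video.

```python
import math

def pcm_encode(signal, sample_rate, duration, bits=16):
    """Sample signal(t) at a fixed rate and quantize to signed integers."""
    full_scale = 2 ** (bits - 1) - 1            # largest positive code
    n_samples = int(round(sample_rate * duration))
    samples = []
    for n in range(n_samples):
        t = n / sample_rate                      # evenly spaced sample times
        value = max(-1.0, min(1.0, signal(t)))   # clip anything beyond full scale
        samples.append(int(round(value * full_scale)))
    return samples

def tone(t):
    """A 1kHz sine wave with amplitude 1.0 (hypothetical test input)."""
    return math.sin(2 * math.pi * 1000 * t)

# One millisecond of a 1kHz tone at 8kHz: exactly one cycle, eight samples.
samples = pcm_encode(tone, sample_rate=8000, duration=0.001)
print(samples)
```

The result is just a list of integers: the "stream of digits". Everything that follows is about how fast to take them, how to write each one down, and how to arrange more than one channel of them.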

Sample Rate

The first parameter is the sampling rate. The highest frequency an encoding can represent is called the Nyquist Frequency. The Nyquist frequency of PCM happens to be exactly half the sampling rate. Therefore, the sampling rate directly determines the highest possible frequency in the digitized signal.
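The arithmetic is as simple as it sounds. A tiny sketch, with a hypothetical helper name and a handful of common sampling rates as inputs:

```python
def nyquist_frequency(sample_rate_hz):
    """Highest frequency a PCM stream at this rate can represent."""
    return sample_rate_hz / 2

for rate in (8000, 44100, 48000, 96000):
    print(f"{rate} Hz sampling -> Nyquist frequency {nyquist_frequency(rate):.0f} Hz")
```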

Analog telephone systems traditionally band-limited voice channels to just under 4kHz, so digital telephony and most classic voice applications use an 8kHz sampling rate: the minimum sampling rate necessary to capture the entire bandwidth of a 4kHz channel. This is what an 8kHz sampling rate sounds like—a bit muffled but perfectly intelligible for voice. This is the lowest sampling rate that's ever been used widely in practice.

From there, as power, and memory, and storage increased, consumer computer hardware went to offering 11, and then 16, and then 22, and then 32kHz sampling. With each increase in the sampling rate and the Nyquist frequency, it's obvious that the high end becomes a little clearer and the sound more natural.

The Compact Disc uses a 44.1kHz sampling rate, which is again slightly better than 32kHz, but the gains are becoming less distinct. 44.1kHz is a bit of an oddball choice, especially given that it hadn't been used for anything prior to the compact disc, but the huge success of the CD has made it a common rate.

The most common hi-fidelity sampling rate aside from the CD is 48kHz. There's virtually no audible difference between the two. This video, or at least the original version of it, was shot and produced with 48kHz audio, which happens to be the original standard for high-fidelity audio with video.

Super-hi-fidelity sampling rates of 88, and 96, and 192kHz have also appeared. The reason for the sampling rates beyond 48kHz isn't to extend the audible high frequencies further. It's for a different reason.

Stepping back for just a second, the French mathematician Jean Baptiste Joseph Fourier showed that we can also think of signals like audio as a set of component frequencies. This frequency-domain representation is equivalent to the time representation; the signal is exactly the same, we're just looking at it a different way. Here we see the frequency-domain representation of a hypothetical analog signal we intend to digitally sample.

The sampling theorem tells us two essential things about the sampling process. First, that a digital signal can't represent any frequencies above the Nyquist frequency. Second, and this is the new part, if we don't remove those frequencies with a low-pass filter before sampling, the sampling process will fold them down into the representable frequency range as aliasing distortion.
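To see the folding in numbers, here's a rough sketch that assumes an ideal sampler, a single pure tone, and no low-pass filter in front. The helper name `alias_frequency` is made up for illustration. The loop at the end checks that a 5kHz cosine and a 3kHz cosine produce the same samples at an 8kHz rate, which is exactly why the sampled data can't tell them apart.

```python
import math

def alias_frequency(freq_hz, sample_rate_hz):
    """Frequency at which a pure tone shows up after unfiltered sampling."""
    folded = freq_hz % sample_rate_hz
    if folded > sample_rate_hz / 2:
        folded = sample_rate_hz - folded   # reflect back below Nyquist
    return folded

print(alias_frequency(3000, 8000))    # below Nyquist: unchanged
print(alias_frequency(5000, 8000))    # above Nyquist: folds down to 3000
print(alias_frequency(30000, 44100))  # folds down to 14100

# A 5kHz and a 3kHz cosine are indistinguishable once sampled at 8kHz.
samples_match = all(
    math.isclose(
        math.cos(2 * math.pi * 5000 * n / 8000),
        math.cos(2 * math.pi * 3000 * n / 8000),
        abs_tol=1e-9,
    )
    for n in range(16)
)
print(samples_match)
```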

Aliasing, in a nutshell, sounds freakin' awful, so it's essential to remove any beyond-Nyquist frequencies before sampling and after reconstruction.

Human frequency perception is considered to extend to about 20kHz. In 44.1 or 48kHz sampling, the low pass before the sampling stage has to be extremely sharp to avoid cutting any audible frequencies below 20kHz but still not allow frequencies above the Nyquist to leak forward into the sampling process. This is a difficult filter to build, and no practical filter succeeds completely. If the sampling rate is 96kHz or 192kHz on the other hand, the low pass has an extra octave or two for its transition band. This is a much easier filter to build. Sampling rates beyond 48kHz are actually one of those messy analog stage compromises.
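The "extra octave or two" can be checked with a little arithmetic. This sketch assumes the filter has to pass everything up to 20kHz and be fully closed by the Nyquist frequency, so the transition band runs from 20kHz to half the sampling rate. The function name is hypothetical.

```python
import math

AUDIBLE_LIMIT_HZ = 20000  # assumed upper edge of the passband

def transition_octaves(sample_rate_hz):
    """Width of the band between 20kHz and Nyquist, in octaves."""
    nyquist = sample_rate_hz / 2
    return math.log2(nyquist / AUDIBLE_LIMIT_HZ)

for rate in (44100, 48000, 96000, 192000):
    print(f"{rate} Hz: {transition_octaves(rate):.3f} octaves")
```

At 44.1kHz the filter gets roughly a seventh of an octave to go from fully open to fully closed. Each doubling of the sampling rate adds exactly one octave to that.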

Sample Format

The second fundamental PCM parameter is the sample format; that is, the format of each digital number. A number is a number, but a number can be represented in bits a number of different ways.

Early PCM was eight-bit linear, encoded as an unsigned byte. The dynamic range is limited to about 50dB and the quantization noise, as you can hear, is pretty severe. Eight-bit audio is vanishingly rare today.
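A common rule of thumb puts the dynamic range of linear PCM at the ratio between full scale and a single quantization step, which works out to about 6dB per bit. The sketch below uses that rule; it's an approximation that ignores dither and the exact noise statistics, and the function name is made up for illustration. The eight-bit result is consistent with the "about 50dB" figure above.

```python
import math

def linear_pcm_dynamic_range_db(bits):
    """Ratio of full scale to one quantization step, in decibels."""
    return 20 * math.log10(2 ** bits)

for bits in (8, 16, 24):
    print(f"{bits}-bit: {linear_pcm_dynamic_range_db(bits):.2f} dB")
```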

Digital telephony typically uses one of two related non-linear eight-bit encodings called A-law and μ-law. These formats encode a roughly 14-bit dynamic range into eight bits by spacing the higher amplitude values farther apart. A-law and μ-law obviously improve quantization noise compared to linear 8-bit, and voice harmonics especially hide the remaining quantization noise well. All three eight-bit encodings (linear, A-law, and μ-law) are typically paired with an 8kHz sampling rate, though I'm demonstrating them here at 48kHz.
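Here's a rough sketch of the idea behind μ-law, using the textbook continuous companding curve with μ = 255. Real telephony codecs use a piecewise-linear approximation of this curve and their own bit layout, so treat the symmetric -127..127 code and the function names below as simplifying assumptions rather than the actual wire format.

```python
import math

MU = 255  # companding constant for the textbook mu-law curve

def mulaw_compress(x):
    """Map x in [-1, 1] onto [-1, 1], stretching small amplitudes."""
    return math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)

def mulaw_expand(y):
    """Inverse of mulaw_compress."""
    return math.copysign(math.expm1(abs(y) * math.log1p(MU)) / MU, y)

def encode_8bit(x):
    """Quantize the compressed value to a code in -127..127 (simplified)."""
    return int(round(mulaw_compress(x) * 127))

def decode_8bit(code):
    return mulaw_expand(code / 127)

# Spacing between neighbouring codes near silence and near full scale.
small_step = decode_8bit(1) - decode_8bit(0)
large_step = decode_8bit(127) - decode_8bit(126)
linear_step = 1 / 127  # spacing of a linear code with the same range
print(small_step, linear_step, large_step)
```

Near silence the steps are far finer than a linear code with the same number of levels, and near full scale they are coarser. That's the "spacing the higher amplitude values farther apart" trade.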

Most modern PCM uses 16- or 24-bit two's-complement signed integers to encode the range from negative infinity to zero decibels in 16 or 24 bits of precision. The maximum absolute value corresponds to zero decibels. As with all the sample formats so far, signals beyond zero decibels, and thus beyond the maximum representable range, are clipped.
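As a small illustration, this sketch converts a signed 16-bit sample to decibels relative to full scale and shows what clipping does to a value outside the representable range. The function names are hypothetical, and full scale is taken to be the largest absolute value the format can hold (32768 for 16 bits).

```python
import math

def clip_to_int16(value):
    """Force a value into the signed 16-bit range."""
    return max(-32768, min(32767, value))

def sample_to_db(sample, bits=16):
    """Level of one sample relative to full scale, in decibels."""
    full_scale = 2 ** (bits - 1)       # maximum absolute value
    if sample == 0:
        return -math.inf               # silence is negative infinity dB
    return 20 * math.log10(abs(sample) / full_scale)

print(sample_to_db(-32768))   # full scale: 0.0 dB
print(sample_to_db(16384))    # half scale: about -6.02 dB
print(sample_to_db(0))        # -inf
print(clip_to_int16(40000))   # beyond the range: clipped to 32767
```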

In mixing and mastering, it's not unusual to use floating-point numbers for PCM instead of integers. A 32-bit IEEE 754 float, that's the normal kind of floating point you see on current computers, has 24 bits of resolution, but an eight-bit floating-point exponent increases the representable range. Floating point usually represents zero decibels as +/-1.0, and because floats can obviously represent considerably beyond that, temporarily exceeding zero decibels during the mixing process doesn't cause clipping. Floating-point PCM takes up more space, so it tends to be used only as an intermediate production format.
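A toy example of that headroom, assuming two made-up tracks and a simple mix bus that sums them and then applies a gain. In the float version the intermediate sum is allowed to exceed 1.0; the second version imitates a format that clips at every stage.

```python
def hard_clip(x):
    """What a format limited to +/-1.0 does to an out-of-range value."""
    return max(-1.0, min(1.0, x))

track_a = [0.75, -0.5, 0.25]   # hypothetical sample values
track_b = [0.5, -0.75, 0.25]
gain = 0.5                      # applied after summing

# Float bus: the sum may pass through 1.25 before the gain brings it back.
float_bus = [(a + b) * gain for a, b in zip(track_a, track_b)]

# Clipping bus: the sum is limited to +/-1.0 before the gain is applied.
clipped_bus = [hard_clip(a + b) * gain for a, b in zip(track_a, track_b)]

print(float_bus)    # peaks preserved
print(clipped_bus)  # peaks flattened
```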

Lastly, most general purpose computers still read and write data in octet bytes, so it's important to remember that samples bigger than eight bits can be in big- or little-endian order, and both endiannesses are common. For example, Microsoft WAV files are little-endian, and Apple AIFC files tend to be big-endian. Be aware of it.
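Here's what that looks like for a single 16-bit sample, using Python's standard `struct` module. The sample value 0x1234 is arbitrary, chosen so the two bytes are easy to tell apart.

```python
import struct

sample = 0x1234  # arbitrary 16-bit sample value (4660)

little = struct.pack("<h", sample)  # little-endian: low byte first
big = struct.pack(">h", sample)     # big-endian: high byte first
print(little.hex(), big.hex())

# Reading bytes with the wrong byte order gives a different, wrong sample.
wrong = struct.unpack(">h", little)[0]
right = struct.unpack("<h", little)[0]
print(right, wrong)
```

Read with the wrong byte order, audio usually comes out as loud noise rather than something subtly off, which at least makes the mistake easy to notice.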

Channels

The third PCM parameter is the number of channels. The convention in raw PCM is to encode multiple channels by interleaving the samples of each channel together into a single stream. Straightforward and extensible.
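A minimal sketch of interleaving, assuming each channel is a plain list of samples of the same length. The function names are made up for illustration.

```python
def interleave(channels):
    """Merge per-channel sample lists into one stream, frame by frame."""
    return [sample for frame in zip(*channels) for sample in frame]

def deinterleave(stream, channel_count):
    """Split an interleaved stream back into per-channel lists."""
    return [stream[i::channel_count] for i in range(channel_count)]

left = [1, 2, 3]
right = [10, 20, 30]

stream = interleave([left, right])
print(stream)                    # left and right samples alternate
print(deinterleave(stream, 2))   # back to one list per channel
```

Adding a third or sixth channel changes nothing but the stride, which is what makes the convention extensible.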

Done!

And that's it! That describes every PCM representation ever. Done. Digital audio is so easy! There's more to do of course, but at this point we've got a nice useful chunk of audio data, so let's get some video too.

Going deeper…

- Wikipedia's article on filter roll-off, to learn why it's hard to build analog filters with a very narrow transition band between the passband and the stopband. Filters that achieve such hard edges often do so at the expense of increased ripple and phase distortion.

- Some more minutiae about PCM in practice.

- DPCM and ADPCM, simple audio codecs loosely inspired by PCM.

Video Vegetables (they're good for you!)